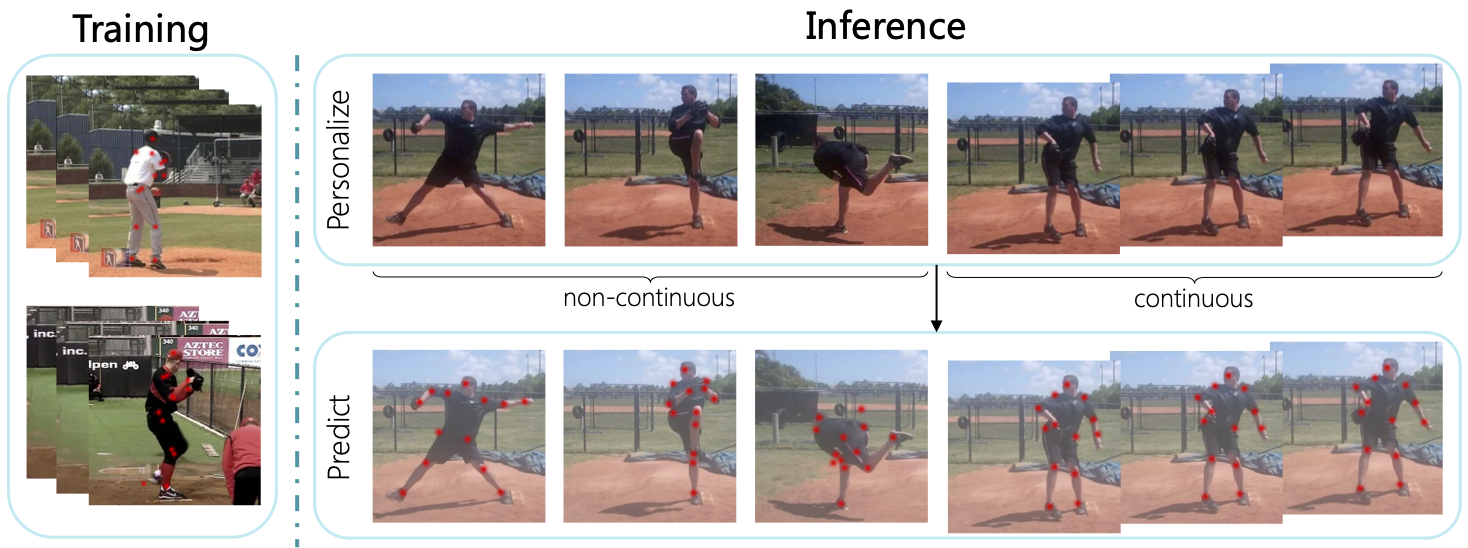

We propose to personalize a human pose estimator given a set of test images of a person, without using any manual annotations. Although human pose estimation has advanced significantly, it remains very challenging for a model to generalize to unknown environments and unseen persons. Instead of using a fixed model for every test case, we adapt our pose estimator at test time to exploit person-specific information. We first train our model on diverse data, jointly optimizing a supervised and a self-supervised pose estimation objective. We use a Transformer model to build a transformation between the self-supervised keypoints and the supervised keypoints. At test time, we personalize and adapt our model by fine-tuning it with the self-supervised objective. The pose is then improved by transforming the updated self-supervised keypoints. We experiment with multiple datasets and show significant improvements in pose estimation with our self-supervised personalization.
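The test-time procedure described above can be illustrated with a deliberately tiny sketch. Everything in it is an assumption made for illustration: the "image" is a single number, the shared encoder is one scalar weight `w`, the self-supervised objective is a toy reconstruction loss, and `to_pose` is a fixed linear map standing in for the Transformer. Whether the transformation stays fixed during personalization is also an assumption here. It is not the model used in this work; it only shows the control flow of adapting on unlabeled test images and then mapping the updated self-supervised output to a pose.

```python
# Toy sketch of test-time personalization (hypothetical names throughout).

def self_sup_loss(w, x):
    """Toy self-supervised loss: the reconstruction w * x should match x."""
    return (w * x - x) ** 2

def self_sup_grad(w, x):
    """Analytic gradient of self_sup_loss with respect to w."""
    return 2.0 * (w * x - x) * x

def to_pose(keypoint, a=2.0, b=0.5):
    """Fixed map from a self-supervised keypoint to a pose value
    (a stand-in for the learned transformation; kept frozen here)."""
    return a * keypoint + b

def personalize(w, test_images, lr=0.05, steps=50):
    """Fine-tune w on unlabeled test images using only the self-supervised loss."""
    for _ in range(steps):
        for x in test_images:
            w -= lr * self_sup_grad(w, x)
    return w

test_images = [1.0, 2.0, 1.5]   # unlabeled "images" of one person
w_before = 0.5                  # weight after (imagined) joint training
w_after = personalize(w_before, test_images)

loss_before = sum(self_sup_loss(w_before, x) for x in test_images)
loss_after = sum(self_sup_loss(w_after, x) for x in test_images)

# The pose is read off the updated self-supervised keypoints.
poses = [to_pose(w_after * x) for x in test_images]
```

No pose labels appear anywhere in `personalize`; only the self-supervised loss drives the update, which is the property the approach relies on.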

Visualization

We use a Transformer to model the relation between the self-supervised keypoints and the supervised keypoints. In the following visualization, the images from left to right are: the original image, the image with self-supervised keypoints, the image with supervised keypoints, and the image reconstructed by the self-supervised task. The arrows between keypoints indicate their correspondences, obtained from the Transformer's affinity matrix. Warmer colors indicate higher confidence.

Penn Action

Columns, left to right: Input Image, Self-supervised Keypoints, Supervised Keypoints, Reconstructed Image

BBC Pose

Columns, left to right: Input Image, Self-supervised Keypoints, Supervised Keypoints, Reconstructed Image
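
The arrows in the visualization link keypoints according to an affinity matrix. The following is a minimal sketch of how such links could be read off, under stated assumptions: rows index supervised keypoints, columns index self-supervised keypoints, raw scores are normalized per row with a softmax, and each supervised keypoint is linked to its highest-weight self-supervised keypoint. The function names and the example scores are hypothetical, and the actual matrix layout and normalization in the model may differ.

```python
import math

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def correspondences(scores):
    """For each supervised keypoint i, return (i, j, confidence), where j is
    the self-supervised keypoint with the largest normalized affinity."""
    links = []
    for i, row in enumerate(scores):
        weights = softmax(row)
        j = max(range(len(weights)), key=weights.__getitem__)
        links.append((i, j, weights[j]))
    return links

# Made-up raw scores: 2 supervised keypoints x 3 self-supervised keypoints.
scores = [
    [2.0, 0.1, 0.3],
    [0.2, 0.1, 3.0],
]
links = correspondences(scores)
```

Each tuple in `links` corresponds to one arrow, and the confidence value is the quantity that a warmer color would represent.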